Batch Processing with Spring Batch

In this guide, we develop a Spring Batch application and deploy it to Cloud Foundry, Kubernetes, and your local machine. In another guide, we deploy the Spring Batch application by using Data Flow.

This guide describes how to build this application from scratch. If you prefer, you can download a zip file that contains the sources for the billrun application, unzip it, and proceed to the deployment step.

You can download the project from your browser or run the following command to download it from the command-line:

```bash
wget "https://github.com/spring-cloud/spring-cloud-dataflow-samples/blob/main/dataflow-website/batch-developer-guides/batch/batchsamples/dist/batchsamples.zip?raw=true" -O batchsamples.zip
```

Development

We start from Spring Initializr and create a Spring Batch application.

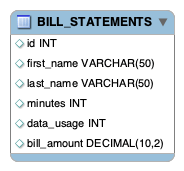

Suppose a cell phone data provider needs to create billing statements for customers. The usage data is stored in JSON files on the file system. The billing solution must pull data from these files, generate billing data from this usage data, and store it in a BILL_STATEMENTS table.

We could implement this entire solution in a single Spring Boot application that uses Spring Batch. However, for this example, we break up the solution into two phases:

- billsetuptask: The billsetuptask application is a Spring Boot application that uses Spring Cloud Task to create the BILL_STATEMENTS table.
- billrun: The billrun application is a Spring Boot application that uses Spring Cloud Task and Spring Batch to read usage data from a JSON file, price each row, and put the resulting data into the BILL_STATEMENTS table.

For this section, we create a Spring Cloud Task and Spring Batch billrun application that reads customer usage data from a JSON file, prices each entry, and places the result into the BILL_STATEMENTS table.

The following image shows the BILL_STATEMENTS table:

Introducing Spring Batch

Spring Batch is a lightweight, comprehensive batch framework designed to enable the development of robust batch applications. Spring Batch provides reusable functions that are essential in processing large volumes of records by offering features such as:

- Logging/tracing

- Chunk-based processing

- Declarative I/O

- Start/Stop/Restart

- Retry/Skip

- Resource management

It also provides more advanced technical services and features that enable extremely high-volume and high-performance batch jobs through optimization and partitioning techniques.

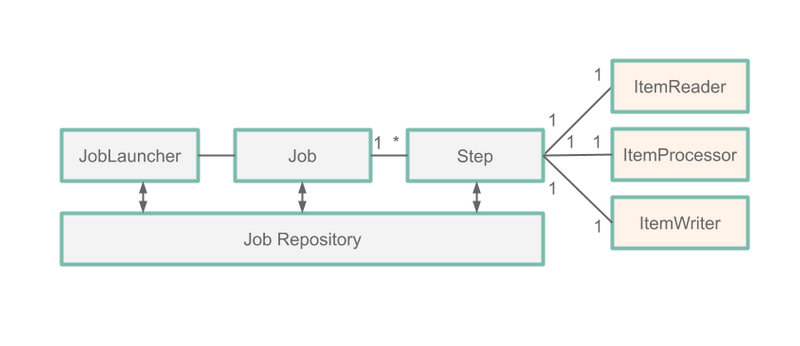

For this guide, we focus on five Spring Batch components, which the following image shows:

- Job: A Job is an entity that encapsulates an entire batch process. A job consists of one or more steps.
- Step: A Step is a domain object that encapsulates an independent, sequential phase of a batch job. Each step consists of an ItemReader, an ItemProcessor, and an ItemWriter.
- ItemReader: ItemReader is an abstraction that represents the retrieval of input for a Step, one item at a time.
- ItemProcessor: ItemProcessor is an abstraction that represents the business processing of an item.
- ItemWriter: ItemWriter is an abstraction that represents the output of a Step.

In the preceding diagram, we see that each phase of the JobExecution is stored in a JobRepository (in this case, our MariaDB database). This means that each action performed by Spring Batch is recorded in a database, both for logging and for restarting a job.
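To make the interplay between these components concrete, the following plain-Java sketch models a chunk-oriented step. Items are read and processed one at a time, written a chunk at a time, and the step's position is recorded after each write. This is not the Spring Batch API. A java.util.function.Function stands in for the ItemProcessor, a list of chunks stands in for the ItemWriter, and the map named repository is a hypothetical stand-in for the JobRepository.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// A simplified model of a chunk-oriented step; NOT the Spring Batch API.
public class ChunkStepSketch {

    // Hypothetical stand-in for the JobRepository: step name -> number of items committed.
    public static final Map<String, Integer> repository = new HashMap<>();

    public static <I, O> void runStep(String stepName, List<I> input, Function<I, O> processor,
            List<List<O>> writer, int chunkSize) {
        if (chunkSize < 1) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        // Resume after the last committed item, if an earlier run recorded one.
        int committed = repository.getOrDefault(stepName, 0);
        Iterator<I> reader = input.listIterator(committed);
        while (reader.hasNext()) {
            List<O> chunk = new ArrayList<>();
            // Read and process items one at a time until the chunk is full or the input runs out.
            while (chunk.size() < chunkSize && reader.hasNext()) {
                chunk.add(processor.apply(reader.next()));
            }
            // Write the whole chunk at once, then record how far the step has progressed.
            writer.add(chunk);
            committed += chunk.size();
            repository.put(stepName, committed);
        }
    }

    public static void main(String[] args) {
        List<Integer> usageIds = List.of(1, 2, 3, 4, 5);
        List<List<String>> written = new ArrayList<>();

        runStep("BillProcessing", usageIds, id -> "bill-" + id, written, 2);
        System.out.println(written);        // [[bill-1, bill-2], [bill-3, bill-4], [bill-5]]

        // Running again finds all five items committed and writes nothing new.
        runStep("BillProcessing", usageIds, id -> "bill-" + id, written, 2);
        System.out.println(written.size()); // 3
    }
}
```

In this simplified model, a second run resumes after the last recorded item, so nothing is written twice. In Spring Batch itself, restart behavior also depends on readers saving their state in the execution context and on the status of the job instance.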

NOTE: You can read more about this process here.

Our Batch Job

So, for our application, we have a BillRun Job that has one Step, which consists of:

- JsonItemReader: An ItemReader that reads a JSON file containing the usage data.
- BillProcessor: An ItemProcessor that generates a price based on each row of data sent from the JsonItemReader.
- JdbcBatchItemWriter: An ItemWriter that writes the priced Bill record to the BILL_STATEMENTS table.

Initializr

Follow these steps to create the app:

- Visit the Spring Initializr site.
- Select the latest 2.7.x release of Spring Boot.
- Create a new Maven project with a Group name of io.spring and an Artifact name of billrun.
- In the Dependencies text box, type task to select the Cloud Task dependency.
- In the Dependencies text box, type jdbc and then select the JDBC API dependency.
- In the Dependencies text box, type h2 and then select the H2 dependency. We use H2 for unit testing.
- In the Dependencies text box, type mariadb and then select the MariaDB dependency (or your favorite database). We use MariaDB for the runtime database.
- In the Dependencies text box, type batch and then select Batch.
- Click the Generate Project button.
- Unzip the billrun.zip file and import the project into your favorite IDE.

Setting up MariaDB

Follow these instructions to run a MariaDB Docker image for this example:

- Pull a MariaDB Docker image by running the following command:

  ```bash
  docker pull mariadb:10.4.22
  ```

- Start MariaDB by running the following command:

  ```bash
  docker run -p 3306:3306 --name mariadb -e MARIADB_ROOT_PASSWORD=password -e MARIADB_DATABASE=task -d mariadb:10.4.22
  ```

Building The Application

- Download the sample usage info by using wget and copy the resulting file to the src/main/resources directory:

  ```bash
  wget https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow-samples/main/dataflow-website/batch-developer-guides/batch/batchsamples/billrun/src/main/resources/usageinfo.json
  ```

- Download the sample schema by using wget and copy the resulting file to the src/main/resources directory:

  ```bash
  wget https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow-samples/main/dataflow-website/batch-developer-guides/batch/batchsamples/billrun/src/main/resources/schema.sql
  ```

- In your favorite IDE, create the io.spring.billrun.model package.
- Create a Usage class in the io.spring.billrun.model package that looks like the contents of Usage.java.
- Create a Bill class in the io.spring.billrun.model package that looks like the contents of Bill.java.
- In your favorite IDE, create the io.spring.billrun.configuration package.

- Create an ItemProcessor for pricing each Usage record. To do so, create a BillProcessor class in the io.spring.billrun.configuration package that looks like the following listing:

  ```java
  package io.spring.billrun.configuration;

  import io.spring.billrun.model.Bill;
  import io.spring.billrun.model.Usage;

  import org.springframework.batch.item.ItemProcessor;

  public class BillProcessor implements ItemProcessor<Usage, Bill> {

      @Override
      public Bill process(Usage usage) {
          // Charge 0.001 per unit of data usage plus 0.01 per minute.
          Double billAmount = usage.getDataUsage() * .001 + usage.getMinutes() * .01;
          return new Bill(usage.getId(), usage.getFirstName(), usage.getLastName(),
                  usage.getDataUsage(), usage.getMinutes(), billAmount);
      }
  }
  ```

  Notice that we implement the ItemProcessor interface, which has the process method that we need to override. Our parameter is a Usage object, and the return value is of type Bill.

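- Now we can create a Java configuration that specifies the beans required for the BillRun Job. In this case, we need to create a BillingConfiguration class in the io.spring.billrun.configuration package that looks like the following listing:

  ```java
  package io.spring.billrun.configuration;

  import javax.sql.DataSource;

  import com.fasterxml.jackson.databind.ObjectMapper;
  import io.spring.billrun.model.Bill;
  import io.spring.billrun.model.Usage;

  import org.springframework.batch.core.Job;
  import org.springframework.batch.core.Step;
  import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
  import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
  import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
  import org.springframework.batch.core.launch.support.RunIdIncrementer;
  import org.springframework.batch.item.ItemProcessor;
  import org.springframework.batch.item.ItemReader;
  import org.springframework.batch.item.ItemWriter;
  import org.springframework.batch.item.database.JdbcBatchItemWriter;
  import org.springframework.batch.item.database.builder.JdbcBatchItemWriterBuilder;
  import org.springframework.batch.item.json.JacksonJsonObjectReader;
  import org.springframework.batch.item.json.JsonItemReader;
  import org.springframework.batch.item.json.builder.JsonItemReaderBuilder;
  import org.springframework.beans.factory.annotation.Autowired;
  import org.springframework.beans.factory.annotation.Value;
  import org.springframework.cloud.task.configuration.EnableTask;
  import org.springframework.context.annotation.Bean;
  import org.springframework.context.annotation.Configuration;
  import org.springframework.core.io.Resource;

  @Configuration
  @EnableTask
  @EnableBatchProcessing
  public class BillingConfiguration {

      @Autowired
      public JobBuilderFactory jobBuilderFactory;

      @Autowired
      public StepBuilderFactory stepBuilderFactory;

      // Defaults to usageinfo.json on the classpath; override it with the usage.file.name property.
      @Value("${usage.file.name:classpath:usageinfo.json}")
      private Resource usageResource;

      @Bean
      public Job job1(ItemReader<Usage> reader, ItemProcessor<Usage, Bill> itemProcessor,
              ItemWriter<Bill> writer) {
          // One chunk-oriented step: read a Usage, price it, and write a Bill (chunk size 1).
          Step step = stepBuilderFactory.get("BillProcessing")
                  .<Usage, Bill>chunk(1)
                  .reader(reader)
                  .processor(itemProcessor)
                  .writer(writer)
                  .build();

          return jobBuilderFactory.get("BillJob")
                  .incrementer(new RunIdIncrementer())
                  .start(step)
                  .build();
      }

      @Bean
      public JsonItemReader<Usage> jsonItemReader() {
          // Unmarshal each JSON object in the resource into a Usage instance.
          ObjectMapper objectMapper = new ObjectMapper();
          JacksonJsonObjectReader<Usage> jsonObjectReader =
                  new JacksonJsonObjectReader<>(Usage.class);
          jsonObjectReader.setMapper(objectMapper);

          return new JsonItemReaderBuilder<Usage>()
                  .jsonObjectReader(jsonObjectReader)
                  .resource(usageResource)
                  .name("UsageJsonItemReader")
                  .build();
      }

      @Bean
      public ItemWriter<Bill> jdbcBillWriter(DataSource dataSource) {
          // beanMapped() fills each :name parameter from the matching Bill property.
          JdbcBatchItemWriter<Bill> writer = new JdbcBatchItemWriterBuilder<Bill>()
                  .beanMapped()
                  .dataSource(dataSource)
                  .sql("INSERT INTO BILL_STATEMENTS (id, first_name, " +
                          "last_name, minutes, data_usage, bill_amount) VALUES " +
                          "(:id, :firstName, :lastName, :minutes, :dataUsage, " +
                          ":billAmount)")
                  .build();
          return writer;
      }

      @Bean
      ItemProcessor<Usage, Bill> billProcessor() {
          return new BillProcessor();
      }
  }
  ```

  With Spring Boot 2.7.x, the @EnableBatchProcessing annotation enables Spring Batch features and provides a base configuration for setting up batch jobs. It is not required with Spring Boot 3.x, where adding it turns off Spring Boot's batch auto-configuration. The @EnableTask annotation sets up a TaskRepository, which stores information about the task execution (such as the start and end times of the task and the exit code).

  In the preceding configuration, our ItemReader bean is an instance of JsonItemReader, which reads the contents of a resource and unmarshalls the JSON data into Usage objects. Our ItemWriter bean is an instance of JdbcBatchItemWriter, which writes the results to our database. JsonItemReader and JdbcBatchItemWriter are ItemReader and ItemWriter implementations provided by Spring Batch. The ItemProcessor is our own BillProcessor.

  Notice that all the beans that use Spring Batch-provided classes (Job, Step, ItemReader, ItemWriter) are built with builders provided by Spring Batch, which means less coding.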
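The jdbcBillWriter bean calls beanMapped(). As a result, each named parameter in its INSERT statement (such as :firstName) is filled from the matching property of the Bill being written. The following sketch gives a rough idea of how that works. It is simplified, reflection-based, and not Spring's implementation. It rewrites each :name placeholder to a JDBC ? and reads the value from a getter with the matching name. SampleBill is a hypothetical two-field stand-in for the Bill model.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified illustration of bean-mapped named parameters; NOT Spring's implementation.
public class NamedParameterSketch {

    // Hypothetical two-field stand-in for the Bill model, exposing JavaBean getters.
    public static class SampleBill {
        private final long id;
        private final String firstName;

        public SampleBill(long id, String firstName) {
            this.id = id;
            this.firstName = firstName;
        }

        public long getId() { return id; }

        public String getFirstName() { return firstName; }
    }

    // Matches named parameters such as :firstName.
    private static final Pattern NAMED_PARAMETER = Pattern.compile(":(\\w+)");

    // Appends the SQL with each :name replaced by '?' to jdbcSql and returns the getter values in order.
    public static List<Object> bind(String sql, Object bean, StringBuilder jdbcSql) {
        List<Object> values = new ArrayList<>();
        Matcher matcher = NAMED_PARAMETER.matcher(sql);
        while (matcher.find()) {
            String name = matcher.group(1);
            String getterName = "get" + Character.toUpperCase(name.charAt(0)) + name.substring(1);
            try {
                Method getter = bean.getClass().getMethod(getterName);
                values.add(getter.invoke(bean));
            }
            catch (ReflectiveOperationException ex) {
                throw new IllegalArgumentException("No readable property '" + name + "'", ex);
            }
            matcher.appendReplacement(jdbcSql, "?");
        }
        matcher.appendTail(jdbcSql);
        return values;
    }

    public static void main(String[] args) {
        StringBuilder jdbcSql = new StringBuilder();
        List<Object> values = bind(
                "INSERT INTO BILL_STATEMENTS (id, first_name) VALUES (:id, :firstName)",
                new SampleBill(1, "jane"), jdbcSql);
        System.out.println(jdbcSql); // INSERT INTO BILL_STATEMENTS (id, first_name) VALUES (?, ?)
        System.out.println(values);  // [1, jane]
    }
}
```

This is also why the parameter names in the INSERT statement (firstName, dataUsage, billAmount) must match the Bill property names rather than the column names.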

Testing

Now that we have written our code, it is time to write our test. In this case, we want to make sure that the bill information has been properly inserted into the BILL_STATEMENTS table.

To create your test, update BillrunApplicationTests.java such that it looks like the following listing:

```java
package io.spring.billrun;

import java.util.List;

import javax.sql.DataSource;

import io.spring.billrun.model.Bill;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.jdbc.core.JdbcTemplate;

import static org.assertj.core.api.Assertions.assertThat;

@SpringBootTest
public class BillrunApplicationTests {

    @Autowired
    private DataSource dataSource;

    private JdbcTemplate jdbcTemplate;

    @BeforeEach
    public void setup() {
        this.jdbcTemplate = new JdbcTemplate(this.dataSource);
    }

    @Test
    public void testJobResults() {
        // The job runs when the application context starts; read back what it wrote.
        List<Bill> billStatements = this.jdbcTemplate.query("select id, " +
                "first_name, last_name, minutes, data_usage, bill_amount " +
                "FROM bill_statements ORDER BY id",
                (rs, rowNum) -> new Bill(rs.getLong("id"),
                        rs.getString("FIRST_NAME"), rs.getString("LAST_NAME"),
                        rs.getLong("DATA_USAGE"), rs.getLong("MINUTES"),
                        rs.getDouble("bill_amount")));

        assertThat(billStatements.size()).isEqualTo(5);

        // Check every field of the first row.
        Bill billStatement = billStatements.get(0);
        assertThat(billStatement.getBillAmount()).isEqualTo(6.0);
        assertThat(billStatement.getFirstName()).isEqualTo("jane");
        assertThat(billStatement.getLastName()).isEqualTo("doe");
        assertThat(billStatement.getId()).isEqualTo(1);
        assertThat(billStatement.getMinutes()).isEqualTo(500);
        assertThat(billStatement.getDataUsage()).isEqualTo(1000);
    }
}
```

For this test, we use JdbcTemplate to execute a query and retrieve the results of the billrun. Once the query has run, we verify that the data in the first row of the table is what we expect.
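The expected values in this test and in the sample output in the deployment sections follow directly from the pricing rule in BillProcessor (data usage times 0.001 plus minutes times 0.01). For the first row, that is 1000 × 0.001 + 500 × 0.01 = 1.0 + 5.0 = 6.0. As a quick check, the following standalone sketch applies the same arithmetic to the data usage and minutes values from the sample output and formats each result to two decimal places. BillAmountCheck is an illustrative class, not part of the sample project.

```java
import java.util.Locale;

// Illustrative check of BillProcessor's pricing rule; not part of the sample project.
public class BillAmountCheck {

    // Same arithmetic as BillProcessor.process(): dataUsage * .001 + minutes * .01
    public static double billAmount(long dataUsage, long minutes) {
        return dataUsage * .001 + minutes * .01;
    }

    // Two decimal places, matching the sample output; Locale.ROOT keeps '.' as the separator.
    public static String format(double amount) {
        return String.format(Locale.ROOT, "%.2f", amount);
    }

    public static void main(String[] args) {
        // {dataUsage, minutes} pairs from the sample output table.
        long[][] rows = {{1000, 500}, {1500, 550}, {1550, 600}, {1500, 650}, {1500, 700}};
        for (long[] row : rows) {
            System.out.println(format(billAmount(row[0], row[1])));
        }
        // Prints 6.00, 7.00, 7.55, 8.00, and 8.50, one per line.
    }
}
```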

Deployment

In this section, we deploy to a local machine, Cloud Foundry, and Kubernetes.

Local

Now we can build the project.

- From a command line, change directory to the location of your project and build the project by running the following Maven command:

  ```bash
  ./mvnw clean package
  ```

- Run the application with the configuration required to process the usage information in the database.

  To configure the billrun application, use the following arguments:

  - spring.datasource.url: Set the URL to your database instance. In the following sample, we connect to a MariaDB task database on our local machine at port 3306.
  - spring.datasource.username: The user name to be used for the MariaDB database. In the following sample, it is root.
  - spring.datasource.password: The password to be used for the MariaDB database. In the following sample, it is password.
  - spring.datasource.driverClassName: The driver to use to connect to the MariaDB database. In the following sample, it is org.mariadb.jdbc.Driver.
  - spring.sql.init.mode: Initializes the database with the BILL_STATEMENTS and BILL_USAGE tables required for this application. In the following sample, we state that we always want to do this. Doing so does not overwrite the tables if they already exist.
  - spring.batch.jdbc.initialize-schema: Initializes the database with the tables required for Spring Batch. In the following sample, we state that we always want to do this. Doing so does not overwrite the tables if they already exist.

  ```bash
  java -jar target/billrun-0.0.1-SNAPSHOT.jar \
    --spring.datasource.url="jdbc:mariadb://localhost:3306/task?useSSL=false" \
    --spring.datasource.username=root \
    --spring.datasource.password=password \
    --spring.datasource.driverClassName=org.mariadb.jdbc.Driver \
    --spring.sql.init.mode=always \
    --spring.batch.jdbc.initialize-schema=always
  ```

- Log in to the mariadb container to query the BILL_STATEMENTS table. To do so, run the following commands:

  ```bash
  docker exec -it mariadb bash -l
  # mariadb -u root -ppassword
  MariaDB> select * from task.BILL_STATEMENTS;
  ```

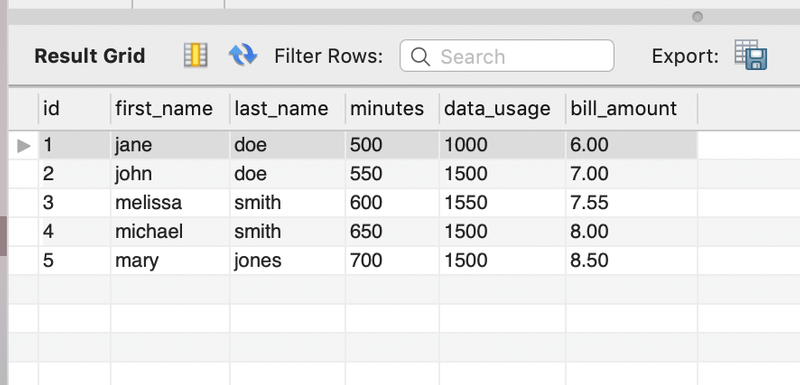

The output should look something like the following:

| id | first_name | last_name | minutes | data_usage | bill_amount |

|---|---|---|---|---|---|

| 1 | jane | doe | 500 | 1000 | 6.00 |

| 2 | john | doe | 550 | 1500 | 7.00 |

| 3 | melissa | smith | 600 | 1550 | 7.55 |

| 4 | michael | smith | 650 | 1500 | 8.00 |

| 5 | mary | jones | 700 | 1500 | 8.50 |

Cleanup

To stop and remove the MariaDB container running in the Docker instance, run the following commands:

```bash
docker stop mariadb
docker rm mariadb
```

Cloud Foundry

This section walks through how to deploy and run simple Spring Batch standalone applications on Cloud Foundry.

Requirements

On your local machine, you need to have installed:

- Java

- Git

- The Cloud Foundry command line interface (see the documentation).

Building the Application

Now you can build the project.

From a command line, change directory to the location of your project and build the project by using the following Maven command: ./mvnw clean package.

Setting up Cloud Foundry

First, you need a Cloud Foundry account. You can create a free account by using Pivotal Web Services (PWS). We use PWS for this example. If you use a different provider, your experience may differ slightly from this document.

To get started, log into Cloud Foundry by using the Cloud Foundry command line interface:

```bash
cf login
```

You can also target specific Cloud Foundry instances with the -a flag, for example: cf login -a https://api.run.pivotal.io.

Before you can push the application, you must set up the MySQL service on Cloud Foundry. You can check what services are available by running the following command:

```bash
cf marketplace
```

On Pivotal Web Services (PWS), you should be able to use the following command to install the MySQL service:

```bash
cf create-service cleardb spark task-example-mysql
```

Make sure you name your MySQL service task-example-mysql. We use that value throughout this document.

Task Concepts in Cloud Foundry

To provide configuration parameters for Cloud Foundry, we create dedicated manifest YAML files for each application. For additional information on setting up a manifest, see the Cloud Foundry documentation.

Running tasks on Cloud Foundry is a two-stage process. Before you can actually run any tasks, you need to first push an app that is staged without any running instances. We add the following common properties to the manifest YAML file of each application:

```yaml
memory: 32M
health-check-type: process
no-route: true
instances: 0
```

The key is to set the instances property to 0. Doing so ensures that the app is staged without being run. We also do not need a route to be created and can set no-route to true.

Having this app staged but not running has a second advantage as well. Not only do we need this staged application to run a task in a subsequent step, but, if your database service is internal (part of your Cloud Foundry instance), we can use this application to establish an SSH tunnel to the associated MySQL database service to see the persisted data. We go into the details for that a little bit later in this document.

Running billrun on Cloud Foundry

Now we can deploy and run the second task application. To deploy, create a file called manifest-billrun.yml with the following content:

```yaml
applications:
  - name: billrun
    memory: 32M
    health-check-type: process
    no-route: true
    instances: 0
    disk_quota: 1G
    timeout: 180
    buildpacks:
      - java_buildpack
    path: target/billrun-0.0.1-SNAPSHOT.jar
    services:
      - task-example-mysql
```

Now run cf push -f ./manifest-billrun.yml. Doing so stages the application. We are now ready to run the task by running the following command:

```bash
cf run-task billrun ".java-buildpack/open_jdk_jre/bin/java org.springframework.boot.loader.JarLauncher arg1" --name billrun-task
```

Doing so should produce output similar to the following:

```text
Task has been submitted successfully for execution.
task name: billrun-task
task id: 1
```

If we verify the task on the Cloud Foundry dashboard, we should see that the task successfully ran. But how do we verify that the task application successfully populated the database table? We do that next.

Validating the Database Results

You have multiple options for how to access a database in Cloud Foundry, depending on your individual Cloud Foundry environment. We deal with the following two options:

- Using local tools (through SSH or an external database provider)

- Using a database GUI deployed to Cloud Foundry

Using Local Tools (MySQLWorkbench)

First, we need to create a key for a service instance by using the cf create-service-key command, as follows:

```bash
cf create-service-key task-example-mysql EXTERNAL-ACCESS-KEY
cf service-key task-example-mysql EXTERNAL-ACCESS-KEY
```

Doing so should give you back the credentials necessary to access the database, such as those in the following example:

```text
Getting key EXTERNAL-ACCESS-KEY for service instance task-example-mysql as <user>...

{
  "hostname": "...",
  "jdbcUrl": "jdbc:mysql://...",
  "name": "...",
  "password": "...",
  "port": "3306",
  "uri": "mysql://...",
  "username": "..."
}
```

The result differs, depending on whether the database service runs internally or is provided by a third party. In the case of PWS and ClearDB, we can connect directly to the database, because ClearDB is a third-party provider.

If you deal with an internal service, you may have to create an SSH tunnel by using the cf ssh command, as follows:

```bash
cf ssh -L 3306:<host_name>:<port> task-example-mysql
```

By using the free MySQLWorkbench, you should see the following populated data:

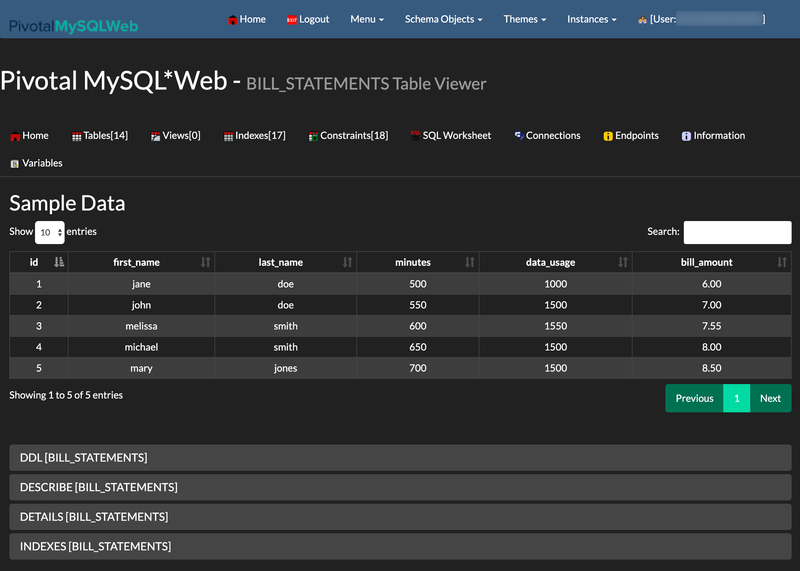

Using a Database GUI Deployed to Cloud Foundry

Another interesting option for keeping an eye on your MySQL instance is to use PivotalMySQLWeb. In a nutshell, you can push PivotalMySQLWeb to your Cloud Foundry space and bind it to your MySQL instance to introspect your MySQL service without having to use local tooling.

Check out the following project:

```bash
git clone https://github.com/pivotal-cf/PivotalMySQLWeb.git
cd PivotalMySQLWeb
```

IMPORTANT: You must first update your credentials in src/main/resources/application-cloud.yml (source on GitHub). By default, the username is admin, and the password is cfmysqlweb.

Then you can build the project by running the following command:

```bash
./mvnw -DskipTests=true package
```

Next, you need to update the manifest.yml file, as follows:

```yaml
applications:
  - name: pivotal-mysqlweb
    memory: 1024M
    instances: 1
    random-route: true
    path: ./target/PivotalMySQLWeb-1.0.0-SNAPSHOT.jar
    services:
      - task-example-mysql
    env:
      JAVA_OPTS: -Djava.security.egd=file:///dev/urandom
```

IMPORTANT: You must set the MySQL service to task-example-mysql. We use that value throughout this document.

In this instance, we set the random-route property to true to generate a random URL for the application. You can watch the console for the value of the URL. Then push the application to Cloud Foundry by running the following command:

```bash
cf push
```

Now you can log in to the application and take a look at the table populated by the billrun task application. The following image shows its content after we have worked through the content of this example:

Tearing down All Task Applications and Services

With the conclusion of this example, you may also want to remove all instances on Cloud Foundry. The following commands accomplish that:

```bash
cf delete billsetuptask -f
cf delete billrun -f
cf delete pivotal-mysqlweb -f -r
cf delete-service-key task-example-mysql EXTERNAL-ACCESS-KEY -f
cf delete-service task-example-mysql -f
```

Note that you must delete the EXTERNAL-ACCESS-KEY service key before you can delete the task-example-mysql service itself. The commands use the following flags:

- -f: Force deletion without confirmation.
- -r: Also delete any mapped routes.

Kubernetes

This section walks you through running the billrun application on Kubernetes.

Setting up the Kubernetes Cluster

For this example, we need a running Kubernetes cluster, and we deploy to minikube.

Verifying that Minikube is Running

To verify that Minikube is running, run the following command (shown with its output):

```bash
minikube status

host: Running
kubelet: Running
apiserver: Running
kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100
```

Installing the Database

We install a MariaDB server by using the default configuration from Spring Cloud Data Flow. To do so, run the following command:

```bash
kubectl apply -f https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow/main/src/kubernetes/mariadb/mariadb-deployment.yaml \
  -f https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow/main/src/kubernetes/mariadb/mariadb-pvc.yaml \
  -f https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow/main/src/kubernetes/mariadb/mariadb-secrets.yaml \
  -f https://raw.githubusercontent.com/spring-cloud/spring-cloud-dataflow/main/src/kubernetes/mariadb/mariadb-svc.yaml
```

Building a Docker Image for the Sample Task Application

We need to build a Docker image for the billrun app.

To do so, we use the Spring Boot Maven plugin to create the image.

You can add the image to the minikube Docker registry. To do so, run the following commands:

```bash
eval $(minikube docker-env)
./mvnw spring-boot:build-image -Dspring-boot.build-image.imageName=springcloudtask/billrun:0.0.1-SNAPSHOT
```

Run the following command to verify its presence (by finding springcloudtask/billrun in the list of images):

```bash
docker images
```

Deploying the Application

The simplest way to deploy a batch application is as a standalone Pod. Deploying batch apps as a Job or CronJob is considered best practice for production environments but is beyond the scope of this guide.

Save the following content to batch-app.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: billrun
spec:
  restartPolicy: Never
  containers:
    - name: task
      image: springcloudtask/billrun:0.0.1-SNAPSHOT
      env:
        - name: SPRING_DATASOURCE_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mariadb
              key: mariadb-root-password
        - name: SPRING_DATASOURCE_URL
          value: jdbc:mariadb://mariadb:3306/task
        - name: SPRING_DATASOURCE_USERNAME
          value: root
        - name: SPRING_DATASOURCE_DRIVER_CLASS_NAME
          value: org.mariadb.jdbc.Driver
        - name: SPRING_BATCH_JDBC_INITIALIZE-SCHEMA
          value: always
        - name: SPRING_SQL_INIT_MODE
          value: always
  initContainers:
    - name: init-mariadb-database
      image: mariadb:10.4.22
      env:
        - name: MARIADB_PWD
          valueFrom:
            secretKeyRef:
              name: mariadb
              key: mariadb-root-password
      command:
        [
          'sh',
          '-c',
          'mariadb -h mariadb -u root --password=$MARIADB_PWD -e "CREATE DATABASE IF NOT EXISTS task;"',
        ]
```

To start the application, run the following command:

```bash
kubectl apply -f batch-app.yaml
```

When the task is complete, you should see output similar to the following:

```bash
kubectl get pods
NAME                       READY   STATUS      RESTARTS   AGE
mariadb-5cbb6c49f7-ntg2l   1/1     Running     0          4h
billrun                    0/1     Completed   0          10s
```

Now you can delete the pod. To do so, run the following command:

```bash
kubectl delete -f batch-app.yaml
```

Now log in to the mariadb container to query the BILL_STATEMENTS table.

Get the name of the mariadb pod by using kubectl get pods, as shown earlier.

Then log in and query the BILL_STATEMENTS table, as follows:

```bash
kubectl exec -it mariadb-5cbb6c49f7-ntg2l -- /bin/bash
# mariadb -u root -p$MARIADB_ROOT_PASSWORD
mariadb> select * from task.BILL_STATEMENTS;
```

The output should look something like the following:

| id | first_name | last_name | minutes | data_usage | bill_amount |

|---|---|---|---|---|---|

| 1 | jane | doe | 500 | 1000 | 6.00 |

| 2 | john | doe | 550 | 1500 | 7.00 |

| 3 | melissa | smith | 600 | 1550 | 7.55 |

| 4 | michael | smith | 650 | 1500 | 8.00 |

| 5 | mary | jones | 700 | 1500 | 8.50 |

To uninstall mariadb, run the following command:

```bash
kubectl delete all -l app=mariadb
```

Database-Specific Notes

Microsoft SQL Server

When you use Spring Batch 4.x or earlier with a Microsoft SQL Server database, you may receive a deadlock from the database when launching multiple Spring Batch applications simultaneously.

This problem was reported in this issue.

One solution is to create sequences instead of tables and create a BatchConfigurer that uses them.

Drop the following tables and replace them with sequences that use the same names:

- BATCH_STEP_EXECUTION_SEQ
- BATCH_JOB_EXECUTION_SEQ
- BATCH_JOB_SEQ

NOTE: Be sure to set each sequence's starting value to the table's current ID + 1.
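To illustrate the note above, the following sketch prints T-SQL that drops each table and creates a sequence of the same name that starts at the current ID + 1. SequenceMigrationScript and statementsFor are hypothetical names. The current ID values are placeholders that you look up yourself. The bare CREATE SEQUENCE ... START WITH form is a minimal assumption. Review the generated statements (for example, the data type and caching options) before running them against your database.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: prints T-SQL that replaces each Spring Batch *_SEQ table with a sequence.
public class SequenceMigrationScript {

    // Drop the table first: a sequence cannot share its name with an existing table in the same schema.
    public static String statementsFor(String name, long currentId) {
        return "DROP TABLE " + name + ";\n"
                + "CREATE SEQUENCE " + name + " START WITH " + (currentId + 1) + ";";
    }

    public static void main(String[] args) {
        // Placeholder values: replace each 0L with the table's current ID.
        Map<String, Long> currentIds = new LinkedHashMap<>();
        currentIds.put("BATCH_STEP_EXECUTION_SEQ", 0L);
        currentIds.put("BATCH_JOB_EXECUTION_SEQ", 0L);
        currentIds.put("BATCH_JOB_SEQ", 0L);
        currentIds.forEach((name, id) -> System.out.println(statementsFor(name, id)));
    }
}
```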

Once the tables have been replaced with sequences, update the batch application to override the BatchConfigurer so that it uses its own incrementer. The following sections show one example of this implementation:

Incrementer

Create your own incrementer:

```java
import javax.sql.DataSource;

import org.springframework.jdbc.support.incrementer.AbstractSequenceMaxValueIncrementer;

// Fetches the next ID from a SQL Server sequence that has the incrementer's name.
public class SqlServerSequenceMaxValueIncrementer extends AbstractSequenceMaxValueIncrementer {

    public SqlServerSequenceMaxValueIncrementer(DataSource dataSource, String incrementerName) {
        super(dataSource, incrementerName);
    }

    @Override
    protected String getSequenceQuery() {
        // SQL Server syntax for reading the next value of a sequence.
        return "select next value for " + getIncrementerName();
    }
}
```

BatchConfigurer

In your configuration, create your own BatchConfigurer that uses the incrementer shown above:

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;

import javax.sql.DataSource;

import org.springframework.batch.core.configuration.annotation.BatchConfigurer;
import org.springframework.batch.core.configuration.annotation.DefaultBatchConfigurer;
import org.springframework.batch.core.repository.JobRepository;
import org.springframework.batch.core.repository.support.JobRepositoryFactoryBean;
import org.springframework.batch.item.database.support.DefaultDataFieldMaxValueIncrementerFactory;
import org.springframework.batch.support.DatabaseType;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.jdbc.support.MetaDataAccessException;
import org.springframework.jdbc.support.incrementer.DataFieldMaxValueIncrementer;

// Example class name; you can add the batchConfigurer bean to your existing configuration instead.
@Configuration
public class SqlServerBatchConfiguration {

    @Bean
    public BatchConfigurer batchConfigurer(DataSource dataSource) {
        return new DefaultBatchConfigurer(dataSource) {

            @Override
            protected JobRepository createJobRepository() throws Exception {
                JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
                // Route every incrementer lookup through getIncrementerForApp(...).
                DefaultDataFieldMaxValueIncrementerFactory incrementerFactory =
                        new DefaultDataFieldMaxValueIncrementerFactory(dataSource) {
                            @Override
                            public DataFieldMaxValueIncrementer getIncrementer(String incrementerType,
                                    String incrementerName) {
                                return getIncrementerForApp(incrementerName);
                            }
                        };
                factory.setIncrementerFactory(incrementerFactory);
                factory.setDataSource(dataSource);
                factory.setTransactionManager(getTransactionManager());
                factory.setIsolationLevelForCreate("ISOLATION_REPEATABLE_READ");
                factory.afterPropertiesSet();
                return factory.getObject();
            }

            private DataFieldMaxValueIncrementer getIncrementerForApp(String incrementerName) {
                String databaseType;
                try {
                    databaseType = DatabaseType.fromMetaData(dataSource).name();
                }
                catch (MetaDataAccessException ex) {
                    throw new IllegalStateException(ex);
                }
                // On SQL Server, use the sequence unless the incrementer still exists as a table.
                if ("SQLSERVER".equals(databaseType) && !isSqlServerTableSequenceAvailable(incrementerName)) {
                    return new SqlServerSequenceMaxValueIncrementer(dataSource, incrementerName);
                }
                // Otherwise, fall back to Spring Batch's default incrementer for this database type.
                return new DefaultDataFieldMaxValueIncrementerFactory(dataSource)
                        .getIncrementer(databaseType, incrementerName);
            }

            // Returns true if a TABLE named incrementerName still exists (that is, it was not replaced by a sequence).
            private boolean isSqlServerTableSequenceAvailable(String incrementerName) {
                // try-with-resources closes the connection and result set.
                try (Connection connection = dataSource.getConnection();
                        ResultSet tables = connection.getMetaData()
                                .getTables(null, null, "%", new String[] {"TABLE"})) {
                    while (tables.next()) {
                        if (incrementerName.equals(tables.getString("TABLE_NAME"))) {
                            return true;
                        }
                    }
                    return false;
                }
                catch (SQLException ex) {
                    throw new IllegalStateException(ex);
                }
            }
        };
    }
}
```

NOTE: The isolation level for create is set to ISOLATION_REPEATABLE_READ to prevent deadlocks when creating entries in the batch tables.

Dependencies

You must use Spring Cloud Task 2.3.3 or later, because starting with version 2.3.3, Spring Cloud Task uses a TASK_SEQ sequence if one is available.